Kafka components one by one

Courtesy :

Stéphane Maarek and Learning Journal

Installation

mkdir -p ~/Downloads

curl "https://www.apache.org/dist/kafka/2.1.1/kafka_2.11-2.1.1.tgz" -o ~/Downloads/kafka.tgz

mkdir -p ~/kafka && cd ~/kafka

tar -xvzf ~/Downloads/kafka.tgz --strip-components=1

Partition:

A topic can be split into multiple partitions, and each partition can reside on a separate broker instance. This gives parallelism for that specific topic (KPI).

New message written to a topic:

Kafka:

Decides which partition the message goes to, which works as a kind of load balancing. Strictly speaking, this choice is made by the partitioner in the Kafka producer client, not by the broker.

Questions:

How does it know which partition is free, or that its offsets are not full (12)?

Even if you produce messages to 2 partitions in parallel, can the bottleneck still be on the consumer side?

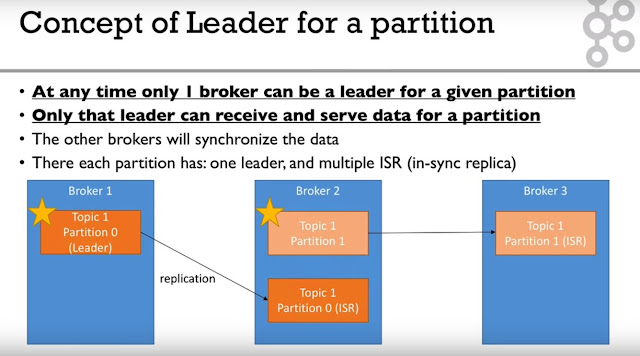

4. Topic Partition replication and leader

5. Load distribution and acknowledgment

The producer just sends data to "BrokerHost:TopicName".

Kafka:

Decides which broker instance and which partition of that topic (KPI) the message should go to.

Ack, explained below

Producer: Message Key

If you want guaranteed ordering of your messages, you should not leave the choice of partition to Kafka's default partitioning logic, which spreads messages without a key across partitions. Instead, you want all of your messages to go to the same partition, because ordering (i.e. offset numbering) is only guaranteed within a single partition.

To achieve this, send the same message key with every message. Messages with the same key always go to the same partition.
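The idea that the same key always lands in the same partition can be sketched in a few lines of Python. This is only an illustration under assumptions: the function name pick_partition and the sample keys are hypothetical, and CRC32 is a stand-in hash (the real Kafka clients use a murmur2 hash of the key).

```python
import zlib


def pick_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition index: same key, same partition."""
    # Stand-in hash for illustration; real Kafka clients use murmur2, not CRC32.
    return zlib.crc32(key) % num_partitions


# Hypothetical keys: every message keyed "order-42" gets the same partition.
keys = [b"order-42", b"order-42", b"order-7", b"order-42"]
assignments = [pick_partition(k, 3) for k in keys]
print(assignments)
```

Because the partition is derived only from the key and the partition count, the mapping stays stable as long as the number of partitions does not change.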

Consumer groups

To increase consumption speed/parallelism for a topic, multiple consumers in the same group each take care of specific partitions of that topic. So if we have 2 partitions, we create two consumers in one group and each consumer is assigned one partition, which gives parallel consumption.
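How partitions get spread over the consumers of one group can be sketched as below. This is a simplified round-robin illustration, not Kafka's actual assignor implementation, and the function name assign_partitions and the consumer names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over the consumers of one group, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(sorted(partitions)):
        # Each partition goes to exactly one consumer in the group.
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Two partitions, two consumers: one partition each.
print(assign_partitions([0, 1], ["consumer-a", "consumer-b"]))
# Three consumers but only two partitions: the third consumer stays idle.
print(assign_partitions([0, 1], ["consumer-a", "consumer-b", "consumer-c"]))
```

The second call shows why adding more consumers than partitions does not add parallelism.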

Load balancing and fault tolerance

Producer load balancing: with multiple partitions

Fault tolerance: with replication factors

CONSUMER

Avoiding duplicate processing of a message by multiple consumers of the same group:

Within a consumer group, one partition can only be read by one consumer.

INSTALLATION AND BROKER Configurations

Start zookeeper

./bin/zookeeper-server-start.sh ./config/zookeeper.properties

INFO [ZooKeeperClient] Connected. (kafka.zookeeper.ZooKeeperClient)


Start Kafka Broker:

./bin/kafka-server-start.sh ./config/server.properties

[KafkaServer id=0] started (kafka.server.KafkaServer)

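By changing the Kafka server port in ./config/server.properties, we can start as many brokers as we want. When several brokers run on the same machine, each one also needs its own broker.id and its own log.dirs.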
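As a rough sketch of what has to differ between brokers on one machine, the Python snippet below generates the per-broker settings. The property names broker.id, listeners and log.dirs are real server.properties keys; the function names, the port numbering scheme and the directory naming are assumptions made for illustration.

```python
def broker_overrides(broker_id, base_port=9092, base_log_dir="/tmp/kafka-logs"):
    """Return the settings that must be unique for each broker on one host."""
    return {
        "broker.id": str(broker_id),
        # Assumed scheme: broker 0 on 9092, broker 1 on 9093, and so on.
        "listeners": f"PLAINTEXT://:{base_port + broker_id}",
        # Assumed scheme: one data directory per broker.
        "log.dirs": f"{base_log_dir}-{broker_id}",
    }


def render_properties(props):
    """Format a dict as key=value lines, as used in server.properties."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


for broker_id in range(2):
    print(render_properties(broker_overrides(broker_id)))
    print()
```

Each rendered block could be saved as its own properties file and passed to kafka-server-start.sh.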

Create a topic :

./bin/kafka-topics.sh --zookeeper localhost:2181 --create --topic FM --partitions 1 --replication-factor 1

Describe topic

./bin/kafka-topics.sh --zookeeper localhost:2181 --describe --topic FM_TOPIC

Topic:FM_TOPIC PartitionCount:1 ReplicationFactor:1 Configs:

Topic: FM_TOPIC Partition: 0 Leader: 0 Replicas: 0 Isr: 0


List brokers

./bin/zookeeper-shell.sh localhost:2181 <<< "ls /brokers/ids"

[0, 1]

Console consumer

./kafka/bin/kafka-console-consumer.sh --bootstrap-server 107.108.52.243:9092 --topic FM --from-beginning

Console producer

./kafka/bin/kafka-console-producer.sh --topic FM --broker-list 107.108.52.243:9092

Issues:

Messages produced from Windows do not reach the topic on the Linux broker.

Solution:

Use the FQDN in "bootstrap.servers" and make sure the correct entries exist in the hosts file of both the Windows and the Linux machine.

/etc/hosts (Linux)

C:\Windows\System32\drivers\etc\hosts (Windows)

107.108.52.243 www.sribldap1.com

Producer code:

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "www.sribldap1.com:9092");

Check if the message has reached the broker

1. Find the log base directory in the Kafka server.properties file (./kafka/config/server.properties)

log.dirs=/var/lib/kafka/data/topics

2. In that folder, look for the folder named "topicName-<partitionNum>"

3. In that folder there is a file named somenum.log; tail it.
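The three steps above can be sketched in Python. The helper names partition_dir and segment_files are hypothetical; the directory layout (log.dirs, then topicName-partitionNum, then *.log files) follows the steps described here.

```python
from pathlib import Path


def partition_dir(log_dirs: str, topic: str, partition: int) -> Path:
    """Build the folder path for one partition: <log.dirs>/<topic>-<partition>."""
    return Path(log_dirs) / f"{topic}-{partition}"


def segment_files(log_dirs: str, topic: str, partition: int):
    """List the .log files of a partition, sorted by name."""
    return sorted(partition_dir(log_dirs, topic, partition).glob("*.log"))


# Example with the log.dirs value and topic used in these notes.
print(partition_dir("/var/lib/kafka/data/topics", "FM", 0))
for segment in segment_files("/var/lib/kafka/data/topics", "FM", 0):
    print(segment)
```

The printed paths are the files to tail; if the partition folder does not exist yet, the list is simply empty.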

LOGS in Kafka

There are two types of logs in Apache Kafka:

1. The logs that store the actual messages of all topics,

configured via log.dirs in kafka/config/server.properties.

If not specified, Kafka uses /tmp/kafka-logs.

2. The logs that store Kafka's internal application messages (info, error, debug),

configured via the -Dkafka.logs.dir=/some/path option when starting Kafka,

which is then used by log4j.properties to create the different log files, such as controller.log.

My question: if we forget to specify the application log directory (i.e. kafka.logs.dir) during Kafka startup, what is the default application log directory used by Kafka?

Ans:

$kafka_Home/kafka/logs/, i.e. the logs folder under the Kafka installation directory.

server.log holds most of the broker's log messages.

listeners=PLAINTEXT://myhost:9092,OUTSIDE://:9094

PLAINTEXT: is just the name of the listener.

myhost:9092: is the host and port the broker binds to when it starts. The broker listens on this IP/eth0 interface and on port 9092; traffic sent there is processed, and traffic sent anywhere else is not.

listeners:

The interfaces and ports the broker binds to and listens on.

advertised.listeners:

The addresses the broker publishes as its external point of contact. In a cloud environment this will be the externally exposed IP and port, such as a NodePort IP:Port.
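To make the structure of the listeners value concrete, here is a small Python sketch that splits it into name, host and port. The function name parse_listeners is hypothetical and this is not how Kafka itself parses the setting; it only illustrates the NAME://host:port format.

```python
def parse_listeners(value: str):
    """Split a listeners string into (name, host, port) tuples."""
    parsed = []
    for entry in value.split(","):
        # "PLAINTEXT://myhost:9092" -> name "PLAINTEXT", address "myhost:9092"
        name, _, address = entry.strip().partition("://")
        # Split on the last colon so the port is always the final part.
        host, _, port = address.rpartition(":")
        parsed.append((name, host, int(port)))
    return parsed


for name, host, port in parse_listeners("PLAINTEXT://myhost:9092,OUTSIDE://:9094"):
    print(name, repr(host), port)
```

An empty host, as in the OUTSIDE entry, means no specific hostname was given for that listener.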
